Developing a FastAPI Application for File Sharing - Part 1

This is a tutorial for building a web application for sharing files, which is very useful when there is no access via SSH. The application will be developed using the minimal FastAPI project template, which saves a lot of time and effort because we start from a ready-made basic project structure.

Introduction

Imagine the following situation: you need to transfer a file to or from a server but you do not have SSH access and therefore cannot use scp or rsync. Setting up SSH may be possible, but it's a lot of work for a one-time transfer.

One solution is to use a cloud storage service to upload and download the file via a REST API. But instead of using Dropbox, Google Drive or something similar, let's develop our own FastAPI application.

Specifications

The application must have the following characteristics:

- The response to a successful file upload is the URL to download that file.

- Each file has a unique download URL, regardless of the file name or content.

- Uploading and downloading are anonymous. No authentication is required.

- The maximum size of an upload is 5 MiB.

- Files are automatically deleted after 60 minutes.

- The maximum number of uploads is 5 per hour per IP.

- There is no limit to the number of downloads.

- The file can be deleted manually by calling the download URL with the DELETE method.

Technical Details

Endpoints

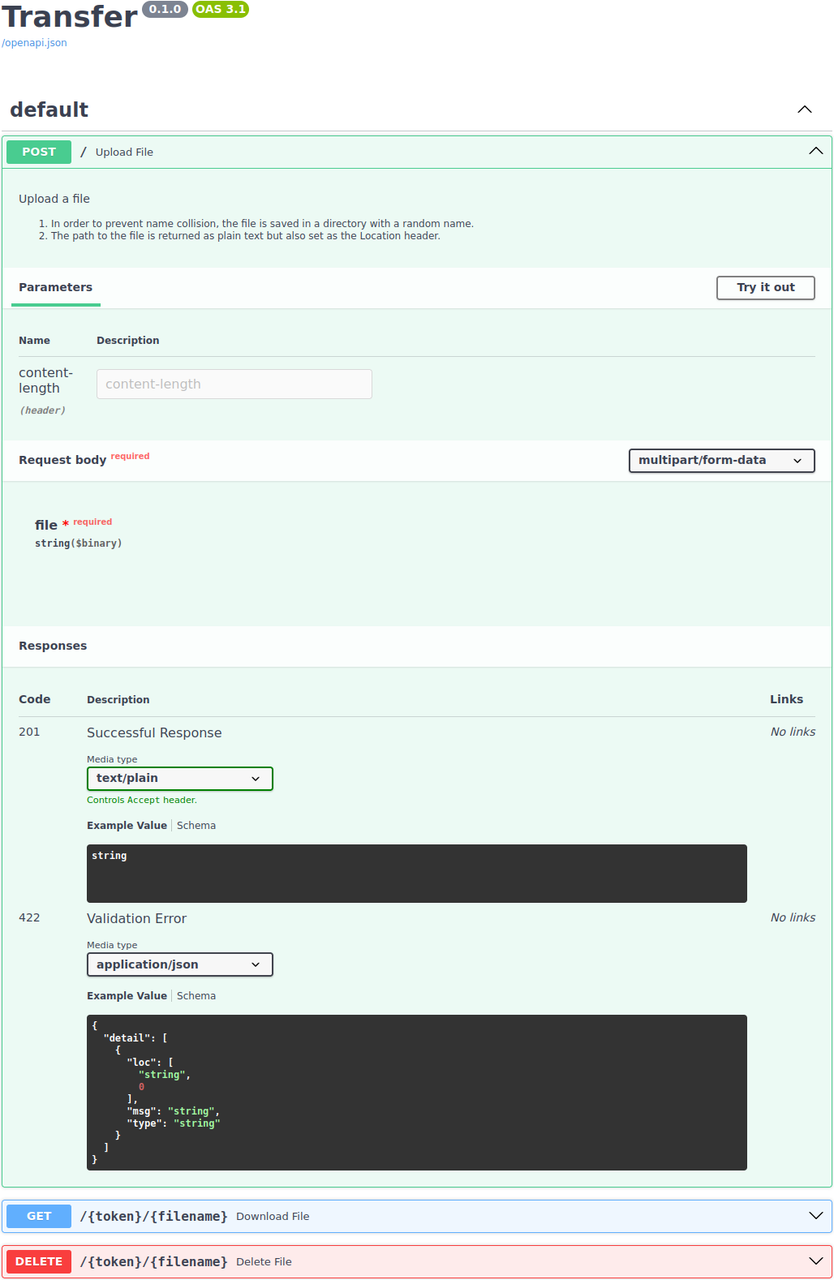

We will need three endpoints: one for upload, one for download and one for delete. For the download endpoint, we will create a path in the form /{token}/{filename}, where token is a random string and filename is the name of the original file:

| Method | Path | Action |
|---|---|---|
| POST | / | Upload a file |
| GET | /{token}/{filename} | Download a file |
| DELETE | /{token}/{filename} | Delete a file |

Considering https://transfer.pronus.xyz as the application address, the command to upload a file named /tmp/numbers_123.txt using HTTPie would be:

    $ http --form POST https://transfer.pronus.xyz file@/tmp/numbers_123.txt
    HTTP/1.1 201 Created
    ...
    Location: https://transfer.pronus.xyz/kCLJQz5vxmE/numbers_123.txt
    ...

    https://transfer.pronus.xyz/kCLJQz5vxmE/numbers_123.txt

To download the file, just use the Location header returned in the upload response:

    $ http https://transfer.pronus.xyz/kCLJQz5vxmE/numbers_123.txt
    HTTP/1.1 200 OK
    Content-Type: text/plain; charset=utf-8
    ...

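And to delete the file, send a DELETE request to the same URL:

    $ http DELETE https://transfer.pronus.xyz/kCLJQz5vxmE/numbers_123.txt

Redoing the same commands with curl, we would have:

    $ curl -F file=@/tmp/numbers_123.txt https://transfer.pronus.xyz
    $ curl -O https://transfer.pronus.xyz/kCLJQz5vxmE/numbers_123.txt
    $ curl -X DELETE https://transfer.pronus.xyz/kCLJQz5vxmE/numbers_123.txt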

Storage

In a commercial application, the files would be stored in a cloud storage service such as S3 or Google Cloud Storage. But for the sake of simplicity, we will use the server's own file system.

The storage directory will be configured through an environment variable called UPLOAD_DIR. We are going to create subdirectories named after the tokens and each of these subdirectories will contain only the uploaded file, with the same name as the original file.

For example, if the uploaded file is numbers_123.txt, the token is kCLJQz5vxmE and the storage directory is /dev/shm/transfer, the file will be stored as /dev/shm/transfer/kCLJQz5vxmE/numbers_123.txt.

Removal Scheduling

As soon as a file is received and stored, a schedule is created for the file to be removed after 60 minutes. If the file is removed manually via a DELETE /{token}/{filename}, the schedule must be cancelled.

We will use APScheduler to control schedules as it is a simple solution that does not require any other service or additional configuration for memory storage mode.

As schedules will only be kept in memory, both files and schedules will be lost in the event of a server failure or restart. That is acceptable for our case because the purpose of the application is only to provide a transient solution for file transfer and not to store files permanently.
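The bookkeeping behind this design (one in-memory removal job per file, identified by an id, cancellable on manual deletion) can be illustrated with standard-library asyncio timers. This is only a sketch of the idea and not APScheduler's API: the `RemovalSchedule` class and its method names are hypothetical, and the application itself relies on APScheduler.

```python
import asyncio
from collections.abc import Callable


class RemovalSchedule:
    """In-memory map of job id -> pending timer (hypothetical helper)."""

    def __init__(self) -> None:
        self._handles: dict[str, asyncio.TimerHandle] = {}

    def add(self, job_id: str, delay: float, callback: Callable[[str], object]) -> None:
        # Must be called from inside a running event loop.
        loop = asyncio.get_running_loop()
        self._handles[job_id] = loop.call_later(delay, self._run, job_id, callback)

    def _run(self, job_id: str, callback: Callable[[str], object]) -> None:
        # The timer fired: forget the job, then perform the removal.
        self._handles.pop(job_id, None)
        callback(job_id)

    def cancel(self, job_id: str) -> bool:
        # Returns False when there is no pending job with that id.
        handle = self._handles.pop(job_id, None)
        if handle is None:
            return False
        handle.cancel()
        return True
```

Because the timers live only in the process memory, they disappear on restart, which is the same trade-off described above for the in-memory mode.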

Checking the Maximum File Size

The size of the file being uploaded is declared in the Content-Length header. However, this value is not reliable because the client can easily fake it. An additional check is required to ensure the file does not exceed the maximum size.

Upload Rate Limit per IP

We will limit the number of uploads to prevent abuse. As access is anonymous, the application will not have a username and password database. Identification will be done by IP, with a limit of 5 uploads per hour per IP.

Although it is possible to carry out this verification through the application, it is better to leave this task to the web server as it is more efficient and simpler to implement.

Instantiation of the Project Template

Let's start by instantiating the minimal FastAPI project template. The best way is using pipx:

    $ pipx run cookiecutter gh:andredias/perfect_python_project \
           -c fastapi-minimum
    [1/8] author (): André Felipe Dias
    [2/8] email (): andre.dias@email.com
    [3/8] project_name (Perfect Python Project): Transfer
    [4/8] project_slug (transfer):
    [5/8] python_version (3.11):
    [6/8] line_length (79): 100
    [7/8] Select version_control
        1 - hg
        2 - git
        Choose from [1/2] (1):
    [8/8] github_respository_url ():
    Creating virtualenv transfer in /home/andre/projetos/tutoriais/transfer/.venv
    Using virtualenv: /home/andre/projetos/tutoriais/transfer/.venv
    Updating dependencies
    ...
    Package operations: 66 installs, 0 updates, 0 removals
      • Installing certifi (2023.7.22)
      • Installing charset-normalizer (3.2.0)
    ...
    Writing lock file
    ruff --silent --exit-zero --fix .
    blue .
    reformatted transfer/main.py
    reformatted transfer/config.py
    All done! ✨ 🍰 ✨
    2 files reformatted, 8 files left unchanged.
    adding .dockerignore
    adding .github/workflows/continuous_integration.yml
    adding .hgignore
    ...

Next, we are going to install some additional dependencies: aiofiles, apscheduler and python-multipart as project dependencies, and types-aiofiles for linting. The latter is a development dependency and it should be installed in a separate group:

    $ poetry add aiofiles=="*" apscheduler=="*" python-multipart=="*"
    $ poetry add --group dev types-aiofiles=="*"

The project directory structure looks like this:

    transfer
    ├── Caddyfile
    ├── docker-compose.dev.yml
    ├── docker-compose.yml
    ├── Dockerfile
    ├── hypercorn.toml
    ├── Makefile
    ├── poetry.lock
    ├── pyproject.toml
    ├── README.rst
    ├── sample.env
    ├── scripts
    │   └── install_hooks.sh
    ├── tests
    │   ├── conftest.py
    │   ├── __init__.py
    │   ├── routers
    │   │   ├── __init__.py
    │   │   └── test_hello.py
    │   └── test_logging.py
    └── transfer
        ├── config.py
        ├── exception_handlers.py
        ├── __init__.py
        ├── logging.py
        ├── main.py
        ├── middleware.py
        ├── resources.py
        └── routers
            ├── hello.py
            └── __init__.py

Settings

In addition to the configuration variables inherited from the minimal FastAPI project, we will need to include the following variables:

- BUFFER_SIZE: Buffer size used in manual file size checking. The default size will be 1 MiB.

- FILE_SIZE_LIMIT: Maximum file size. The default size will be 5 MiB.

- UPLOAD_DIR: File storage directory. The default directory will be /tmp/transfer_files.

- TOKEN_LENGTH: Size of the token used in the download URL. The default size will be 8 bytes.

- TIMEOUT_INTERVAL: Time interval in seconds for automatic file removal. The default interval will be 3600 seconds (1 hour).

The configuration file is:
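As a rough sketch of what such settings might look like, assuming they are read from environment variables with the defaults listed above: the `load_settings` helper is hypothetical and only covers the five new variables, not the ones inherited from the template.

```python
import os
from collections.abc import Mapping
from pathlib import Path


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Read the new settings from env, falling back to the documented defaults."""
    return {
        'BUFFER_SIZE': int(env.get('BUFFER_SIZE', 1024**2)),  # 1 MiB
        'FILE_SIZE_LIMIT': int(env.get('FILE_SIZE_LIMIT', 5 * 1024**2)),  # 5 MiB
        'UPLOAD_DIR': Path(env.get('UPLOAD_DIR', '/tmp/transfer_files')),
        'TOKEN_LENGTH': int(env.get('TOKEN_LENGTH', 8)),  # bytes of randomness
        'TIMEOUT_INTERVAL': int(env.get('TIMEOUT_INTERVAL', 3600)),  # seconds
    }
```

Passing a plain dictionary instead of `os.environ` makes the defaults easy to check in isolation, for example `load_settings({})['FILE_SIZE_LIMIT']`.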

Handling Uploaded Files

As we will have POST, GET and DELETE actions on files, we will also need functions to store, remove and check the existence of uploaded files. Implementing this functionality directly in the endpoints would harm the testability and reusability of the functions. Therefore, we will group them in a separate module called file_utils.py.

Checking If an Uploaded File Exists

The function that checks if a file exists is:
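A minimal sketch of such a check, assuming the storage layout described earlier (UPLOAD_DIR/token/filename). The `upload_dir` parameter is added here only to keep the example self-contained; it is not taken from the article, which reads the directory from its configuration module.

```python
from pathlib import Path

UPLOAD_DIR = Path('/tmp/transfer_files')  # default from the Settings section


def file_exists(token: str, filename: str, upload_dir: Path = UPLOAD_DIR) -> bool:
    """Return True if upload_dir/token/filename is an existing regular file."""
    return (upload_dir / token / filename).is_file()
```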

File Removal

To remove a file:

    from shutil import rmtree

    from . import config


    def remove_file(token: str, filename: str) -> None:  # noqa: ARG001
        """
        Remove a file and its parent directory
        """
        rmtree(config.UPLOAD_DIR / token)

The comment # noqa: ARG001 tells the linter to ignore that filename is declared but not used. filename is not needed because the directory name, defined by token, is unique. Since each directory holds a single file, removing the directory also removes the file. The file name is still kept as a parameter for consistency with the other functions.

Removing Expired Files

We will also need a function to remove expired files. That function will be called by APScheduler from time to time to guarantee that the storage will be cleaned periodically even if there is a server failure.

    from time import time

    from . import config


    def remove_expired_files() -> None:
        """
        Remove files that have been stored for too long
        """
        timeout_ref = time() - config.TIMEOUT_INTERVAL
        for path in config.UPLOAD_DIR.glob('*/*'):
            if path.stat().st_mtime < timeout_ref:
                remove_file(*path.relative_to(config.UPLOAD_DIR).parts)

Storing Files

And finally, the most important function, the one that saves the file. It is more complex because during the saving process it is necessary to check the actual size of the file, as the size declared in the header Content-Length can be faked.

The purpose of Readable (line 10) is to define an interface for the read method that works for both UploadFile (from FastAPI) and the file objects from aiofiles, used in tests.

The filename is inferred from file (line 22). However, UploadFile has the attribute filename while an aiofiles file object has the attribute name.

token is generated using the token_urlsafe function from the secrets module (line 23). This function generates a random string of configurable length that can be used in URLs.
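To make the relation between TOKEN_LENGTH and the URL concrete, here is a small check. The argument of `token_urlsafe` is a number of random bytes, not characters; 8 bytes are encoded as 11 URL-safe Base64 characters, which matches the length of the example token kCLJQz5vxmE used earlier.

```python
import secrets
import string

TOKEN_LENGTH = 8  # number of random bytes, as in the Settings section

token = secrets.token_urlsafe(TOKEN_LENGTH)

# URL-safe Base64 alphabet: letters, digits, '-' and '_' (padding is stripped)
URL_SAFE_CHARS = set(string.ascii_letters + string.digits + '-_')

print(token, len(token))
```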

The actual file size is checked while saving the file in pieces (lines 29 to 36). The size of each saved part is counted and if the actual file size exceeds the limit, the saving process is stopped, the partially saved file is removed and an exception is thrown.
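The steps described above can be sketched as follows. This is not the article's listing, so the line numbers mentioned in the text do not apply to it. Several things are assumptions made to keep the example self-contained: the settings are passed as parameters instead of being read from the configuration module, the file name is passed explicitly instead of being inferred from the file object, the return value is a (token, filename) pair, and plain blocking writes are used where the project depends on aiofiles.

```python
import secrets
from pathlib import Path
from shutil import rmtree
from typing import Protocol


class Readable(Protocol):
    """Anything with an async read(), e.g. UploadFile or an aiofiles handle."""

    async def read(self, size: int = -1) -> bytes: ...


async def save_file(
    file: Readable,
    filename: str,
    upload_dir: Path,
    size_limit: int = 5 * 2**20,  # FILE_SIZE_LIMIT default (5 MiB)
    buffer_size: int = 2**20,  # BUFFER_SIZE default (1 MiB)
    token_length: int = 8,  # TOKEN_LENGTH default
) -> tuple[str, str]:
    """Store the upload as upload_dir/token/filename, enforcing size_limit."""
    token = secrets.token_urlsafe(token_length)
    directory = upload_dir / token
    directory.mkdir(parents=True)
    written = 0
    try:
        # Blocking writes keep the sketch free of third-party dependencies.
        with (directory / filename).open('wb') as out:
            while chunk := await file.read(buffer_size):
                written += len(chunk)
                if written > size_limit:
                    raise OSError('File too large')
                out.write(chunk)
    except OSError:
        rmtree(directory)  # drop the partially saved file
        raise
    return token, filename
```

Counting the bytes actually read, rather than trusting Content-Length, means an oversized upload is rejected as soon as the limit is crossed, after at most one extra buffer has been read.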

Unit Tests for File Handling Functions

Before moving on to the next part of the project, let's create tests for the file manipulation functions. We will use three tests:

- Test the file life cycle: creating, downloading, and removing a file.

- Test removing expired files.

- Test saving files that exceed the maximum size.

These tests will be in test_file_utils.py.

File Lifecycle Test

The idea here is to cover file creation, verification, and removal using file_exists, remove_file and save_file:

tmp_path is a pytest fixture that creates a temporary directory for tests. So, we don't have to worry about cleaning up the storage directory at the end of each test.

The first check is for a file that does not exist (line 9). Next, we create a file that will be used for upload (lines 10 and 11). This file needs to be passed through a parameter that allows asynchronous reading in binary mode, which is how UploadFile (FastAPI) works. That's why we're using aiofiles (line 12).

After the file is saved, we check if it exists (line 14). Finally, we remove the file and check that it no longer exists (lines 15 and 16).

Expired Files Removal Test

The test creates and saves a file (lines 8 to 11). The function that removes expired files is called (line 15) but nothing should change because not enough time has passed yet. Then the clock is patched to a time in the future (line 19), the remove function is called again (line 20), and this time the file should have been removed (line 21).
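The time-patching technique can be illustrated with a self-contained sketch. It is not the article's test: `remove_expired_files` below is a simplified stand-in that takes the directory as a parameter, `check_expiry` is a hypothetical helper, and `unittest.mock.patch` is assumed as the patching tool.

```python
import time
from pathlib import Path
from shutil import rmtree
from unittest.mock import patch

TIMEOUT_INTERVAL = 3600  # seconds, default from the Settings section


def remove_expired_files(upload_dir: Path) -> None:
    """Stand-in: remove token directories whose file is older than the timeout."""
    timeout_ref = time.time() - TIMEOUT_INTERVAL
    for path in upload_dir.glob('*/*'):
        if path.stat().st_mtime < timeout_ref:
            rmtree(path.parent)


def check_expiry(upload_dir: Path) -> None:
    stored = upload_dir / 'token123' / 'numbers_123.txt'
    stored.parent.mkdir()
    stored.write_text('123')

    # Not enough time has passed: nothing should be removed.
    remove_expired_files(upload_dir)
    assert stored.exists()

    # Pretend the clock is just past the timeout and try again.
    future = time.time() + TIMEOUT_INTERVAL + 1
    with patch('time.time', return_value=future):
        remove_expired_files(upload_dir)
    assert not stored.exists()
```

Patching the clock avoids both sleeping in the test and modifying the file's timestamps.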

Large File Saving Test

The test ensures that a file that exceeds the maximum size is not saved:

The test starts by patching the maximum file size to 10 bytes (line 7). It then creates an 11-byte file (line 10) and tries to save it (line 13). As the file exceeds the maximum size, an OSError exception is expected to be raised (line 12). If it is not, the test fails.

Test Execution

This is the state of the test execution so far:

    (transfer) $ make test
    pytest -x --cov-report term-missing --cov-report html --cov-branch \
           --cov transfer/
    ========================= test session starts ==========================
    platform linux -- Python 3.11.4, pytest-7.4.1, pluggy-1.3.0
    rootdir: /home/andre/projetos/tutoriais/transfer
    configfile: pyproject.toml
    plugins: alt-pytest-asyncio-0.7.2, anyio-4.0.0, cov-4.1.0
    collected 8 items

    tests/test_file_utils.py ...                                     [ 37%]
    tests/test_logging.py ....                                       [ 87%]
    tests/routers/test_hello.py .                                    [100%]

    ---------- coverage: platform linux, python 3.11.4-final-0 -----------
    Name                            Stmts   Miss Branch BrPart  Cover   Missing
    ------------------------------------------------------------------------
    transfer/__init__.py                0      0      0      0   100%
    transfer/config.py                 20      1      2      1    91%   11
    transfer/exception_handlers.py     11      0      0      0   100%
    transfer/file_utils.py             37      1     12      0    98%   39
    transfer/logging.py                25      0      8      2    94%   15->19, 30->32
    transfer/main.py                   14      0      2      0   100%
    transfer/middleware.py             33      2      4      0    95%   37-38
    transfer/resources.py              21      0      4      0   100%
    transfer/routers/__init__.py        0      0      0      0   100%
    transfer/routers/hello.py           7      0      2      0   100%
    ------------------------------------------------------------------------
    TOTAL                             168      4     34      3    97%
    Coverage HTML written to dir htmlcov

Scheduler Initialization

Resource initiation and termination are done respectively in startup and shutdown functions in resources.py:

The scheduler is instantiated at the beginning of the module (line 12). In the function startup, the scheduler is started (line 27) and a schedule for the function remove_expired_files is added to run once per day (line 28). In the function shutdown, the scheduler exits (line 33).

Endpoint Creation

Now that we have the file manipulation functions, we are going to create the endpoints in routers/file.py. First, we need to change main.py to include the new router:

The changes are in lines 9 and 16, which include the file router. The hello router has been removed because it will no longer be used.

GET /{token}/{filename}

This is the simplest endpoint. Just return the file if it exists, otherwise return a 404 error:

To return the file, we use FastAPI's FileResponse class (lines 11 and 16).

DELETE /{token}/{filename}

The next endpoint removes uploaded files. If the file does not exist, the response is a 404. If it exists, the file is removed, the removal schedule is cancelled, and the response code is 204:

Schedule removal is based on id, which will be set to {token}/{filename} when the file is saved and the schedule is created.

POST /

The last endpoint uploads a file. In the case where everything happens as expected:

- The file is saved

- A removal schedule is created

- The return code is 201

- The file download URL is returned in the Location header, and also as the response body

If the file size exceeds the limit, the file is not saved and the return code is 413.

content_length (line 23) serves as an initial check of the file size, based on Content-Length header. The other check is done during file saving (line 38).

The file download URL (lines 52 to 59) depends on where the application is running. If it's in production, there will be a reverse proxy that will provide an X-Forwarded-Proto header probably containing https. The domain is obtained from the X-Forwarded-Host, which determines the domain originally used, or Host if the former is not available. Combining this information with the endpoint's path, token and filename, we have the complete download URL.

In the second case, the application is running locally (development or testing) and the download URL is assembled from the request URL. The answer will be something like http://localhost:5000/{token}/{filename}.
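The two cases can be sketched as a small pure function. This is an illustration under assumptions, not the endpoint's code: `build_download_url` is a hypothetical name, the headers are taken as a plain mapping with lowercase keys, and the file name is percent-encoded with `urllib.parse.quote`, a detail the text does not mention.

```python
from collections.abc import Mapping
from urllib.parse import quote


def build_download_url(
    headers: Mapping[str, str],
    request_url: str,
    token: str,
    filename: str,
) -> str:
    """Assemble the download URL for a stored file."""
    path = f'{token}/{quote(filename)}'
    proto = headers.get('x-forwarded-proto')
    if proto:
        # Behind a reverse proxy: prefer the originally requested host.
        host = headers.get('x-forwarded-host') or headers.get('host', '')
        return f'{proto}://{host}/{path}'
    # Running locally: derive the URL from the request itself.
    return f'{request_url.rstrip("/")}/{path}'
```

For example, a request to http://localhost:5000/ without proxy headers would yield http://localhost:5000/{token}/{filename}, while the same upload behind the proxy would yield an https URL on the public domain.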

As recommended in the MDN documentation on response code 201, we will return the URL of the created resource in both the body of the response message and the Location header. To return plain text, we use PlainTextResponse (line 18). Otherwise, FastAPI would return a JSON with the URL.

The endpoints are available in routers/file.py.

Endpoint Tests

Now that the endpoints are ready, let's create tests for them in test_file.py.

File Lifecycle Testing

The first test covers the entire lifecycle of a file. We are going to create a temporary file, upload that same file twice, download the file, remove the file, and try downloading the file again:

The file upload test must check the result of the request, the Location header and the response body (lines 20 to 23), whether the file was actually saved and whether a schedule was created (lines 24 to 26).

The second upload test uses the same file, but simulates a reverse proxy (line 33). The response must contain a different token than the first upload (lines 36 and 37) but the same filename (line 38). The other upload checks are the same.

The download test checks whether the response is successful, whether the returned content is correct, and whether the schedule still exists (lines 44 to 48).

The removal test checks that the return code is 204, that the file was actually removed, and that the schedule was canceled (lines 50 to 54).

When trying to get or remove the file again, the return code should be 404 (lines 56 and 62).

File Size Limit Test

A separate test will be created to cover the case where the file size exceeds the limit:

The test starts by changing the maximum allowed size to 10 bytes during the test (line 15). It then creates an 11-byte file (line 18) and attempts to upload it (line 21). The return code should be 413 (line 22).

On the second attempt, the declared file size is faked as half the maximum size (line 27). The result should be the same (line 29).

Test Execution

The test result is:

    (transfer) $ make test
    pytest -x --cov-report term-missing --cov-report html --cov-branch \
           --cov transfer/
    ========================== test session starts =========================
    platform linux -- Python 3.11.4, pytest-7.4.3, pluggy-1.3.0
    rootdir: /home/andre/projetos/tutoriais/transfer
    configfile: pyproject.toml
    plugins: alt-pytest-asyncio-0.7.2, anyio-4.0.0, cov-4.1.0
    collected 9 items

    tests/test_file_utils.py ...                                     [ 33%]
    tests/test_logging.py ....                                       [ 77%]
    tests/routers/test_file.py ..                                    [100%]

    ---------- coverage: platform linux, python 3.11.4-final-0 -----------
    Name                            Stmts   Miss Branch BrPart  Cover   Missing
    ------------------------------------------------------------------------
    transfer/__init__.py                0      0      0      0   100%
    transfer/config.py                 20      1      2      1    91%   11
    transfer/exception_handlers.py     11      0      0      0   100%
    transfer/file_utils.py             37      1     12      0    98%   39
    transfer/logging.py                25      0      8      2    94%   15->19, 30->32
    transfer/main.py                   14      0      2      0   100%
    transfer/middleware.py             33      0      4      0   100%
    transfer/resources.py              27      0      4      0   100%
    transfer/routers/__init__.py        0      0      0      0   100%
    transfer/routers/file.py           42      1     16      1    97%   33
    ------------------------------------------------------------------------
    TOTAL                             209      3     48      4    97%
    Coverage HTML written to dir htmlcov

    =========================== 9 passed in 0.49s ==========================

Manual Testing

To test the project manually, you first need to get the project running:

    (transfer) $ make run
    ENV=development docker compose -f docker-compose.yml -f docker-compose.dev.yml up --build
    [+] Building 8.8s (16/16) FINISHED
     => [app internal] load .dockerignore                    0.0s
     => => transferring context: 113B                        0.0s
     => => naming to docker.io/library/transfer              0.0s
    ...
    [+] Running 2/2
     ✔ Container transfer  Recreated                         0.1s
     ✔ Container caddy     Recreated                         0.0s
    Attaching to caddy, transfer
    caddy | {"level":"info","ts":1698771838.952902,"msg":"using provided configuration","config_file":"/etc/caddy/Caddyfile","config_adapter":"caddyfile"}
    ...
    caddy | {"level":"info","ts":1698771839.0067704,"msg":"serving initial configuration"}
    transfer | {
    transfer |     "timestamp": "2023-10-31T17:04:01.684941+00:00",
    transfer |     "level": "DEBUG",
    transfer |     "message": "config vars",
    transfer |     "source": "resources.py:show_config:39",
    transfer |     "BUFFER_SIZE": 1048576,
    transfer |     "DEBUG": true,
    transfer |     "ENV": "development",
    transfer |     "FILE_SIZE_LIMIT": 5242880,
    transfer |     "PYGMENTS_STYLE": "github-dark",
    transfer |     "REQUEST_ID_LENGTH": 8,
    transfer |     "TESTING": false,
    transfer |     "TIMEOUT_INTERVAL": 3600,
    transfer |     "TOKEN_LENGTH": 8,
    transfer |     "UPLOAD_DIR": "/tmp/transfer_files"
    transfer | }
    transfer |
    transfer |
    transfer | {
    transfer |     "timestamp": "2023-10-31T17:04:01.687691+00:00",
    transfer |     "level": "INFO",
    transfer |     "message": "started...",
    transfer |     "source": "resources.py:startup:29"
    transfer | }
    transfer |
    transfer |
    transfer | [2023-10-31 17:04:01 +0000] [9] [INFO] Running on http://0.0.0.0:5000 (CTRL + C to quit)

There are two testing options. One option is to interact with the Swagger interface, accessible at https://localhost/:

The second option is the command line, using curl or HTTPie, as presented earlier in the technical details section.

Final Thoughts from Part 1

This article presented a tutorial on developing a web application for file sharing. We started from a project specification and created each necessary part until we reached a functional application, including the corresponding automated tests. There are still some details that will be covered in a future article, such as creating a static page with operating instructions and configuring the reverse proxy to deal with the limit of uploads per IP.

One of the objectives achieved was to show how to create a web application with FastAPI simply and quickly using the minimal FastAPI project template as a base. A lot of time and effort was saved because the basic structure of the application was already ready from the template, meaning it was only necessary to include the new project features.

Previous article: How to Create Structured and Traceable Logs in FastAPI Applications
